OOP in Big Data Systems: Designing Reusable and Scalable Components in Hadoop and Spark

Introduction

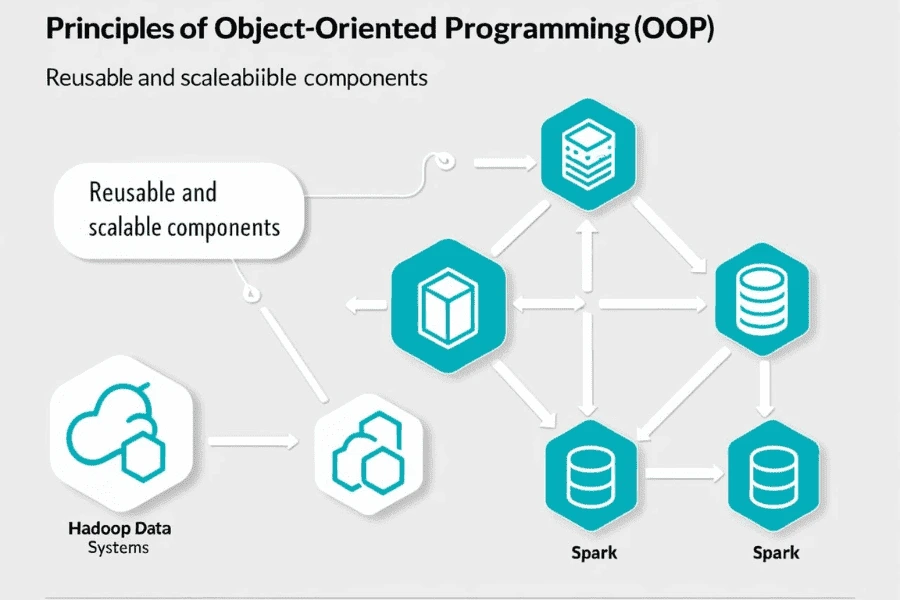

Big Data systems like Hadoop and Apache Spark are designed to process massive volumes of data efficiently. As data infrastructure grows more complex, engineers and data professionals are expected to write scalable, maintainable code that integrates smoothly across data pipelines. This is where Object-Oriented Programming (OOP) becomes critical. Applying OOP principles in Big Data systems enables developers to design reusable components, modular applications, and extendable analytics frameworks that can evolve alongside business needs. From creating custom data processing jobs to structuring entire data applications, OOP empowers better design in distributed computing environments.

The Role of OOP in Big Data Architectures

OOP allows developers to define real-world entities as classes, encapsulating both data (attributes) and behaviors (methods). In Big Data systems, this abstraction provides clear organization to complex logic—such as data extraction, transformation, loading (ETL), and distributed computations—while promoting reusability and reducing code duplication. As projects scale, modularity becomes key. With OOP, components can be developed and tested independently, improving system reliability and collaboration between teams.

Moreover, using class hierarchies and interfaces allows developers to extend functionality without modifying the core logic—an essential trait in dynamic environments where requirements shift frequently.
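
As a minimal sketch of that idea, the snippet below defines an interface and two implementations of it. Every name here (`RecordFilter`, `NonEmptyUserFilter`, `MinAmountFilter`, `apply_filters`) is hypothetical and chosen only for illustration, and plain dictionaries stand in for real records. The point is that `apply_filters` never has to change when a new filter class is added.

```python
from abc import ABC, abstractmethod
from typing import Iterable, List


class RecordFilter(ABC):
    """Hypothetical interface: decides whether a record is kept."""

    @abstractmethod
    def keep(self, record: dict) -> bool:
        ...


class NonEmptyUserFilter(RecordFilter):
    """Keeps records that carry a non-empty user_id."""

    def keep(self, record: dict) -> bool:
        return bool(record.get("user_id"))


class MinAmountFilter(RecordFilter):
    """Keeps records whose amount is at least the configured minimum."""

    def __init__(self, minimum: float) -> None:
        self.minimum = minimum

    def keep(self, record: dict) -> bool:
        return record.get("amount", 0.0) >= self.minimum


def apply_filters(records: Iterable[dict], filters: List[RecordFilter]) -> List[dict]:
    """Core logic: stays the same however many filter classes exist."""
    return [r for r in records if all(f.keep(r) for f in filters)]


records = [
    {"user_id": "u1", "amount": 12.0},
    {"user_id": "", "amount": 50.0},
    {"user_id": "u3", "amount": 3.0},
]
kept = apply_filters(records, [NonEmptyUserFilter(), MinAmountFilter(10.0)])
```

A new requirement, such as filtering by date, would be met by adding another `RecordFilter` subclass rather than editing `apply_filters`.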

Using OOP in Hadoop-Based Systems

Apache Hadoop is built around the MapReduce programming model, and its jobs are typically written in Java, a language deeply rooted in OOP. Developers write Mapper and Reducer classes by extending the base classes in the Hadoop API (or by implementing the corresponding interfaces in the older mapred API), along with a Driver class that configures and submits the job.
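
The division of labour between those three classes can be illustrated without a cluster. The sketch below is a pure-Python, in-process simulation of the mapper, reducer, and driver roles. It is not Hadoop code and does not use the Hadoop API; `WordCountMapper`, `SumReducer`, and `LocalDriver` are hypothetical names used only to show how the responsibilities are split.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


class WordCountMapper:
    """Emits a (word, 1) pair for every word in an input line."""

    def map(self, line: str) -> Iterator[Tuple[str, int]]:
        for word in line.lower().split():
            yield word, 1


class SumReducer:
    """Collapses all values that share a key into their sum."""

    def reduce(self, key: str, values: Iterable[int]) -> Tuple[str, int]:
        return key, sum(values)


class LocalDriver:
    """Wires a mapper and a reducer together and runs them in-process."""

    def __init__(self, mapper, reducer) -> None:
        self.mapper = mapper
        self.reducer = reducer

    def run(self, lines: Iterable[str]) -> Dict[str, int]:
        grouped = defaultdict(list)
        for line in lines:
            # Map phase: turn each line into key/value pairs.
            for key, value in self.mapper.map(line):
                # Shuffle stand-in: group values by key.
                grouped[key].append(value)
        # Reduce phase: one call per distinct key.
        return dict(self.reducer.reduce(k, v) for k, v in grouped.items())


counts = LocalDriver(WordCountMapper(), SumReducer()).run(
    ["spark and hadoop", "hadoop and hive"]
)
```

Because the driver only depends on objects with `map` and `reduce` methods, swapping in a different mapper changes what the job computes without touching the orchestration code.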

By abstracting business logic into reusable components, developers can write flexible applications that process different data types or perform varying transformations by simply switching out or extending a class. For example, creating a base class for parsing input data can eliminate repetition across different job types.
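
One way such a parsing base class might look is sketched below. The class names and the record layout (`region`, `amount`) are assumptions made for the example. The base class owns the behaviour every job shares (skipping blank lines, dropping malformed ones), while each subclass supplies only the format-specific parsing.

```python
import json
from abc import ABC, abstractmethod
from typing import Iterable, Iterator, Optional


class BaseLineParser(ABC):
    """Hypothetical base class holding logic shared by all input formats."""

    def parse_all(self, lines: Iterable[str]) -> Iterator[dict]:
        for line in lines:
            line = line.strip()
            if not line:
                continue  # blank lines are skipped for every format
            record = self._safe_parse(line)
            if record is not None:
                yield record

    def _safe_parse(self, line: str) -> Optional[dict]:
        try:
            return self.parse_line(line)
        except (ValueError, KeyError):
            return None  # malformed lines are dropped rather than failing the job

    @abstractmethod
    def parse_line(self, line: str) -> dict:
        ...


class CsvSaleParser(BaseLineParser):
    """Parses 'region,amount' lines."""

    def parse_line(self, line: str) -> dict:
        region, amount = line.split(",")
        return {"region": region, "amount": float(amount)}


class JsonSaleParser(BaseLineParser):
    """Parses one JSON object per line."""

    def parse_line(self, line: str) -> dict:
        raw = json.loads(line)
        return {"region": raw["region"], "amount": float(raw["amount"])}


csv_rows = list(CsvSaleParser().parse_all(["eu,10.5", "", "broken", "us,4"]))
json_rows = list(JsonSaleParser().parse_all(['{"region": "eu", "amount": 2}', "{oops"]))
```

Silently dropping bad lines is a simplification; a production job would more likely count or log them.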

Hadoop also benefits from object-oriented design when integrating with tools such as Apache Pig and Hive, where custom UDFs (User Defined Functions) can be encapsulated in classes and maintained as libraries. HBase offers comparable class-based extension points in the form of custom filters and coprocessors.

OOP and Apache Spark: A Natural Fit

Apache Spark, written in Scala (a hybrid object-functional language) and offering bindings in Java, Python, and R, lends itself naturally to OOP practices. Spark’s architecture encourages the use of reusable components in the form of data processing pipelines, UDFs, and custom transformations.

For example, a data engineer can define an abstract class BaseETLJob with methods for reading, transforming, and writing data. Specific jobs (like processing sales or user logs) can inherit from this base class and override only the transformation logic. This approach leads to scalable, readable, and testable code.
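
A minimal sketch of the `BaseETLJob` idea follows. To keep it runnable without a Spark installation, in-memory lists of dictionaries stand in for DataFrames, and the `source` and `sink` attributes stand in for real input and output locations. `SalesJob`, `UserLogJob`, and the field names are assumptions for illustration.

```python
from abc import ABC, abstractmethod
from typing import List


class BaseETLJob(ABC):
    """Template for a job: read, transform, write. Only transform varies."""

    def __init__(self, source: List[dict]) -> None:
        self.source = source        # stand-in for a path or table name
        self.sink: List[dict] = []  # stand-in for an output location

    def read(self) -> List[dict]:
        return list(self.source)

    @abstractmethod
    def transform(self, rows: List[dict]) -> List[dict]:
        ...

    def write(self, rows: List[dict]) -> None:
        self.sink.extend(rows)

    def run(self) -> List[dict]:
        # The fixed sequence lives in one place and is inherited by every job.
        self.write(self.transform(self.read()))
        return self.sink


class SalesJob(BaseETLJob):
    """Totals the amount per region."""

    def transform(self, rows: List[dict]) -> List[dict]:
        totals = {}
        for row in rows:
            totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
        return [{"region": r, "total": t} for r, t in sorted(totals.items())]


class UserLogJob(BaseETLJob):
    """Keeps only log entries with an error status."""

    def transform(self, rows: List[dict]) -> List[dict]:
        return [row for row in rows if row.get("status") == "error"]


sales = SalesJob([
    {"region": "eu", "amount": 10.0},
    {"region": "us", "amount": 5.0},
    {"region": "eu", "amount": 2.5},
]).run()
errors = UserLogJob([{"status": "ok"}, {"status": "error", "msg": "timeout"}]).run()
```

In a real Spark job, `read` and `write` would wrap the session's reader and writer, and `transform` would take and return a DataFrame; the class structure would stay the same.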

Additionally, Spark MLlib, its machine learning library, is built with an object-oriented pipeline architecture—where stages like transformers and estimators are defined as classes. This design allows users to chain components into repeatable pipelines, supporting clean experimentation and productionisation.
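
The transformer and estimator pattern can be sketched in plain Python. The classes below imitate the shape of the pattern (an estimator's `fit` returns a transformer, and a pipeline fits its stages in order), but they are a simplified stand-in written for this example and do not import or reproduce the `pyspark.ml` API. Lists of floats stand in for DataFrames.

```python
from abc import ABC, abstractmethod
from typing import List


class Transformer(ABC):
    @abstractmethod
    def transform(self, values: List[float]) -> List[float]:
        ...


class Estimator(ABC):
    @abstractmethod
    def fit(self, values: List[float]) -> Transformer:
        ...


class Clip(Transformer):
    """Stateless stage: limits every value to the range [low, high]."""

    def __init__(self, low: float, high: float) -> None:
        self.low, self.high = low, high

    def transform(self, values: List[float]) -> List[float]:
        return [min(max(v, self.low), self.high) for v in values]


class MeanCenterModel(Transformer):
    """Fitted stage: subtracts a mean learned during fit."""

    def __init__(self, mean: float) -> None:
        self.mean = mean

    def transform(self, values: List[float]) -> List[float]:
        return [v - self.mean for v in values]


class MeanCenter(Estimator):
    def fit(self, values: List[float]) -> MeanCenterModel:
        return MeanCenterModel(sum(values) / len(values))


class PipelineModel(Transformer):
    """A chain of already-fitted stages applied in order."""

    def __init__(self, stages: List[Transformer]) -> None:
        self.stages = stages

    def transform(self, values: List[float]) -> List[float]:
        for stage in self.stages:
            values = stage.transform(values)
        return values


class Pipeline(Estimator):
    def __init__(self, stages: list) -> None:
        self.stages = stages

    def fit(self, values: List[float]) -> PipelineModel:
        fitted, current = [], values
        for stage in self.stages:
            if isinstance(stage, Estimator):
                stage = stage.fit(current)  # learn from the data seen so far
            fitted.append(stage)
            current = stage.transform(current)  # feed the next stage
        return PipelineModel(fitted)


model = Pipeline([Clip(0.0, 10.0), MeanCenter()]).fit([2.0, 6.0, 30.0])
centered = model.transform([2.0, 6.0, 30.0])
```

Because the fitted model is itself a transformer, the same object can be reused on new data, which is what makes the pipeline repeatable between experimentation and production.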

Advantages of OOP in Big Data Workflows

Reusability: OOP enables developers to write code once and reuse it across multiple pipelines or jobs.

Modularity: Large systems can be broken into smaller, manageable classes, making them easier to develop, test, and debug.

Extensibility: Inheritance and polymorphism support evolving business logic without rewriting core functionality.

Maintainability: Code written using OOP principles is easier to understand, document, and hand off between teams.

Testing: Encapsulation allows for unit testing of individual components, even in distributed systems.
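
To illustrate the testing point, here is a small sketch of a component whose state is fully encapsulated, together with unit tests that run on a laptop with no cluster involved. `Deduplicator` and its tests are hypothetical examples using Python's standard `unittest` module.

```python
import unittest


class Deduplicator:
    """Keeps the first record seen for each key; state is private to the object."""

    def __init__(self, key: str) -> None:
        self._key = key
        self._seen = set()

    def process(self, records):
        unique = []
        for record in records:
            value = record[self._key]
            if value not in self._seen:
                self._seen.add(value)
                unique.append(record)
        return unique


class DeduplicatorTest(unittest.TestCase):
    def test_drops_repeated_keys(self):
        dedup = Deduplicator("id")
        rows = [{"id": 1}, {"id": 2}, {"id": 1}]
        self.assertEqual(dedup.process(rows), [{"id": 1}, {"id": 2}])

    def test_remembers_keys_across_batches(self):
        dedup = Deduplicator("id")
        dedup.process([{"id": 1}])
        self.assertEqual(dedup.process([{"id": 1}, {"id": 3}]), [{"id": 3}])


def run_tests() -> bool:
    """Runs the test case and reports whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DeduplicatorTest)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()
```

Each test creates its own `Deduplicator`, so the tests cannot interfere with one another through shared state.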

Best Practices for Applying OOP in Big Data Systems

Abstract common logic into base classes to avoid redundancy across different data jobs.

Use design patterns like Factory, Strategy, or Builder for job configuration, data transformation, and job execution orchestration.

Encapsulate state and behavior in single-responsibility classes to adhere to clean code practices.

Separate I/O and business logic to enable easier mocking and testing of jobs locally before running them on clusters.

Document class hierarchies and data flow to ensure teams can understand and extend existing systems easily.
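
Several of these practices can be combined in one short sketch. Below, each transformation is a Strategy object, a small Factory maps configuration strings to strategies, and the job receives its reader and writer as injected callables so that I/O stays separate from business logic. All names (`UppercaseNames`, `MaskEmails`, `make_strategy`, `Job`) and the sample record are assumptions made for illustration.

```python
from typing import Callable, Dict, List


class UppercaseNames:
    """Strategy: upper-cases the name field."""

    def apply(self, row: dict) -> dict:
        return {**row, "name": row["name"].upper()}


class MaskEmails:
    """Strategy: hides all but the first character of the email's local part."""

    def apply(self, row: dict) -> dict:
        user, _, domain = row["email"].partition("@")
        return {**row, "email": user[:1] + "***@" + domain}


# Factory: maps a config string to a strategy so job config stays declarative.
STRATEGIES: Dict[str, Callable[[], object]] = {
    "uppercase_names": UppercaseNames,
    "mask_emails": MaskEmails,
}


def make_strategy(name: str):
    try:
        return STRATEGIES[name]()
    except KeyError:
        raise ValueError(f"unknown transformation: {name}") from None


class Job:
    """Business logic only; reading and writing are injected callables."""

    def __init__(self, reader: Callable[[], List[dict]],
                 writer: Callable[[List[dict]], None], steps: list) -> None:
        self.reader = reader
        self.writer = writer
        self.steps = steps

    def run(self) -> None:
        rows = self.reader()
        for step in self.steps:
            rows = [step.apply(row) for row in rows]
        self.writer(rows)


# Local run: in-memory reader and writer replace cluster storage.
output: List[dict] = []
job = Job(
    reader=lambda: [{"name": "ada", "email": "ada@example.com"}],
    writer=output.extend,
    steps=[make_strategy(n) for n in ["uppercase_names", "mask_emails"]],
)
job.run()
```

On a cluster, only the `reader` and `writer` arguments would change; the strategies and the `Job` class could be reused as they are.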

Conclusion

Incorporating Object-Oriented Programming into Big Data systems provides a strategic advantage in designing applications that are modular, maintainable, and scalable. Whether working in Hadoop’s Java-based environment or Spark’s flexible APIs, OOP offers the architectural discipline needed to manage growing data ecosystems. As data engineering matures into software engineering at scale, mastering OOP becomes essential for building long-term, production-ready Big Data solutions.
