Object-Oriented Programming in Data Science: Building Maintainable Workflows with Scikit-learn and Beyond

Introduction

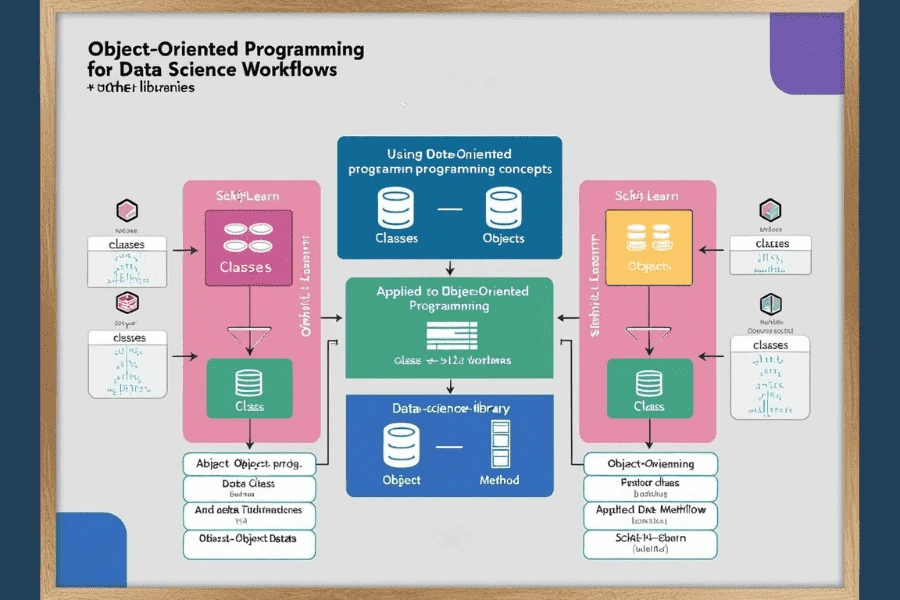

As data science projects grow in complexity and scale, maintainability and reusability become just as important as model accuracy. Object-Oriented Programming (OOP) provides a foundation for building structured, modular workflows. In popular libraries like Scikit-learn, OOP principles are at the core of pipelines, model classes, and transformers. By using OOP, data scientists can create reusable components, hide complexity behind clear interfaces, and collaborate more easily across teams, which leads to faster iteration cycles and more reliable production systems.

The Role of OOP in Scikit-learn

Scikit-learn is a prime example of OOP applied effectively in data science. Every algorithm, transformation, and pipeline component in Scikit-learn is encapsulated in a class, and these classes share a consistent interface. All estimators implement .fit(); predictors such as LogisticRegression and RandomForestClassifier add .predict(), while transformers such as StandardScaler and PCA add .transform(). This design encourages modularity and interoperability, making it easy to mix and match components while preserving a consistent structure. Through a shared base class (BaseEstimator), mixin classes, and documented conventions, Scikit-learn keeps new algorithms on the same design rules, which simplifies debugging and testing. Moreover, because models are objects, developers can serialize them, inspect their learned state through attributes, or include them as steps in a broader pipeline.
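To make the shared interface concrete, here is a minimal sketch that chains two transformers and a classifier into one Pipeline object. The synthetic dataset, step names, and hyperparameters are illustrative assumptions, not recommendations.

```python
import pickle

from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data so the example is self-contained (illustrative only).
X, y = make_classification(n_samples=200, n_features=10, random_state=0)

# Transformers expose fit/transform; the final step exposes fit/predict.
pipeline = Pipeline(
    steps=[
        ("scale", StandardScaler()),
        ("reduce", PCA(n_components=5)),
        ("classify", LogisticRegression(max_iter=1000)),
    ]
)

# One fit call runs fit/transform on each transformer, then fits the classifier.
pipeline.fit(X, y)
predictions = pipeline.predict(X)

# Learned state lives on the fitted objects as trailing-underscore attributes.
scaler_means = pipeline.named_steps["scale"].mean_

# The fitted pipeline is a single object, so it can be serialized as a whole.
restored = pickle.loads(pickle.dumps(pipeline))
```

Because the pipeline itself follows the estimator interface, it can be passed anywhere a single model is expected, for example into cross-validation or a grid search.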

Encapsulation and Reusability in Custom Workflows

OOP allows data scientists to go beyond pre-built libraries by defining their own objects for domain-specific tasks. For example, a data scientist working in finance might create a CreditScoringModel class that encapsulates data preprocessing, feature selection, model training, and evaluation in a single object. This encapsulation allows for cleaner code and easier reuse across multiple projects or datasets.

Additionally, having well-defined classes makes it easier to onboard new team members, as they can interact with the system through intuitive methods and attributes instead of navigating disjointed scripts.
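The CreditScoringModel idea described above could be sketched roughly as follows. This is a hypothetical class, not a library API: the method names (train, score_applicants, evaluate), the choice of SelectKBest and LogisticRegression, and the synthetic data standing in for credit records are all assumptions made for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler


class CreditScoringModel:
    """Hypothetical wrapper: preprocessing, feature selection, training, evaluation."""

    def __init__(self, n_features=5, random_state=0):
        self.n_features = n_features
        self.random_state = random_state
        # Internal details are hidden behind the public methods below.
        self._pipeline = Pipeline(
            steps=[
                ("scale", StandardScaler()),
                ("select", SelectKBest(score_func=f_classif, k=n_features)),
                ("model", LogisticRegression(max_iter=1000, random_state=random_state)),
            ]
        )

    def train(self, X, y):
        """Fit all steps on the training data and return self for chaining."""
        self._pipeline.fit(X, y)
        return self

    def score_applicants(self, X):
        """Return the predicted probability of the positive class per row."""
        return self._pipeline.predict_proba(X)[:, 1]

    def evaluate(self, X, y):
        """Return ROC AUC on held-out data."""
        return roc_auc_score(y, self.score_applicants(X))


# Synthetic stand-in for a credit dataset (assumption for illustration).
X, y = make_classification(
    n_samples=500, n_features=12, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

model = CreditScoringModel(n_features=5).train(X_train, y_train)
auc = model.evaluate(X_test, y_test)
scores = model.score_applicants(X_test)
```

Callers only see train, score_applicants, and evaluate, so the internal steps can change later without touching the code that uses the class.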

Inheritance for Workflow Generalization

Inheritance supports the creation of generalized, adaptable workflows. Suppose you define a base class BasePipeline with common functionality such as data loading, logging, and model evaluation. You can then extend this class into ClassificationPipeline and RegressionPipeline, each with task-specific logic while still adhering to a shared structure. This kind of design not only reduces code duplication but also simplifies experimentation. You can try different models or techniques with minimal refactoring, accelerating development without compromising maintainability.
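One possible shape for this hierarchy is sketched below. The class names come from the text; everything else (the abstract hooks load_data, build_model, and metric, the synthetic datasets, and the chosen models) is an assumption for illustration. In this sketch the base class owns the train/test split, logging, and evaluation, while subclasses supply the data, the model, and the metric.

```python
import logging
from abc import ABC, abstractmethod

from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LinearRegression
from sklearn.metrics import accuracy_score, r2_score
from sklearn.model_selection import train_test_split

logger = logging.getLogger(__name__)


class BasePipeline(ABC):
    """Shared workflow: split, fit, evaluate, log."""

    def __init__(self, random_state=0):
        self.random_state = random_state
        self.model = self.build_model()

    @abstractmethod
    def load_data(self):
        """Return a (features, target) pair."""

    @abstractmethod
    def build_model(self):
        """Return an unfitted estimator."""

    @abstractmethod
    def metric(self, y_true, y_pred):
        """Return a single evaluation score."""

    def run(self):
        X, y = self.load_data()
        X_train, X_test, y_train, y_test = train_test_split(
            X, y, test_size=0.25, random_state=self.random_state
        )
        self.model.fit(X_train, y_train)
        score = self.metric(y_test, self.model.predict(X_test))
        logger.info("%s score: %.3f", type(self).__name__, score)
        return score


class ClassificationPipeline(BasePipeline):
    def load_data(self):
        return make_classification(
            n_samples=300, n_features=8, random_state=self.random_state
        )

    def build_model(self):
        return RandomForestClassifier(
            n_estimators=50, random_state=self.random_state
        )

    def metric(self, y_true, y_pred):
        return accuracy_score(y_true, y_pred)


class RegressionPipeline(BasePipeline):
    def load_data(self):
        return make_regression(
            n_samples=300, n_features=8, noise=5.0, random_state=self.random_state
        )

    def build_model(self):
        return LinearRegression()

    def metric(self, y_true, y_pred):
        return r2_score(y_true, y_pred)


clf_score = ClassificationPipeline().run()
reg_score = RegressionPipeline().run()
```

Swapping in a different model means overriding build_model in a new subclass; the run logic stays untouched.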

OOP for Pipeline Automation and Experimentation

OOP also fits well with automated experimentation and model tracking systems. By defining experiment logic in objects like ExperimentRunner, you can control the entire workflow, from data preprocessing to model evaluation, through configuration or dynamic method calls. Keeping each run's parameters and results on an object simplifies logging, versioning, and parameter tuning. Integration with tools like MLflow, DVC, or custom dashboards becomes more straightforward when experiments are encapsulated in objects that expose structured data and logs.
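A configuration-driven ExperimentRunner might look something like the sketch below. The class name comes from the text, but its fields, the MODEL_REGISTRY mapping, and the config layout are hypothetical. The runner stores plain dictionaries in memory; handing those records to a tracking tool is left out and would depend on the tool you use.

```python
from dataclasses import dataclass, field

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Hypothetical registry mapping config names to estimator classes.
MODEL_REGISTRY = {
    "logistic_regression": LogisticRegression,
    "random_forest": RandomForestClassifier,
}


@dataclass
class ExperimentRunner:
    """Runs experiments described by config dicts and keeps structured results."""

    cv_folds: int = 3
    results: list = field(default_factory=list)

    def run(self, config, X, y):
        # Build the model from configuration rather than hard-coded logic.
        model_cls = MODEL_REGISTRY[config["model"]]
        params = config.get("params", {})
        model = model_cls(**params)

        # Default scoring for classifiers is accuracy.
        scores = cross_val_score(model, X, y, cv=self.cv_folds)

        record = {
            "model": config["model"],
            "params": params,
            "mean_accuracy": float(scores.mean()),
        }
        self.results.append(record)
        return record

    def best(self):
        """Return the record with the highest mean accuracy so far."""
        return max(self.results, key=lambda r: r["mean_accuracy"])


X, y = make_classification(n_samples=300, n_features=10, random_state=0)

configs = [
    {"model": "logistic_regression", "params": {"max_iter": 1000}},
    {"model": "random_forest", "params": {"n_estimators": 50, "random_state": 0}},
]

runner = ExperimentRunner(cv_folds=3)
for config in configs:
    runner.run(config, X, y)

best_run = runner.best()
```

Since each record is a plain dictionary, it can be written to JSON, compared across runs, or forwarded to whatever tracking system the team already has.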

Extending Beyond Scikit-learn: OOP in Modern ML Ecosystems

Scikit-learn is one of the most familiar examples of OOP in Python-based data science, and many other frameworks follow similar principles. In PyTorch and TensorFlow, custom models, loss functions, and data loaders are typically written as classes that extend framework base classes. Code that serves models through FastAPI or presents them in Streamlit also benefits from OOP, since a model wrapped in a class can be loaded once and called through a stable interface. By maintaining a clean object-oriented architecture, data science projects can evolve into full-fledged, maintainable software systems capable of handling changes in data, business logic, or deployment infrastructure.
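As a framework-agnostic illustration of the production point, the sketch below wraps a model in a small service class that a web handler could call. It deliberately avoids importing any web framework. PredictionService, the Predictor protocol, the payload layout, and the ThresholdModel stand-in are all hypothetical names chosen for this example.

```python
from typing import Protocol


class Predictor(Protocol):
    """Anything with a predict method, e.g. a fitted Scikit-learn estimator."""

    def predict(self, X): ...


class ThresholdModel:
    """Stand-in model: predicts 1 when the row sum exceeds a threshold."""

    def __init__(self, threshold):
        self.threshold = threshold

    def predict(self, X):
        return [int(sum(row) > self.threshold) for row in X]


class PredictionService:
    """Validates request payloads and delegates to the wrapped model."""

    def __init__(self, model: Predictor, n_features: int):
        self.model = model
        self.n_features = n_features

    def handle(self, payload: dict) -> dict:
        rows = payload.get("instances")
        if not rows:
            raise ValueError("payload must contain a non-empty 'instances' list")
        for row in rows:
            if len(row) != self.n_features:
                raise ValueError(f"expected {self.n_features} features per row")
        predictions = self.model.predict(rows)
        # Convert to plain ints so the response is JSON-serializable.
        return {"predictions": [int(p) for p in predictions]}


service = PredictionService(ThresholdModel(threshold=1.0), n_features=2)
response = service.handle({"instances": [[0.2, 0.3], [0.9, 0.8]]})
# response == {"predictions": [0, 1]}
```

The serving layer depends only on the predict method, so the stand-in can be replaced by a real trained model without changing the handler code.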

Conclusion

Object-Oriented Programming equips data scientists with the tools to build clean, modular, and scalable workflows. By leveraging OOP’s principles in libraries like Scikit-learn—and extending them to custom codebases—teams can ensure maintainability, promote collaboration, and support production-readiness from the very beginning of a project. As data science continues to mature as a discipline, adopting software engineering best practices like OOP will become a competitive advantage, not just a convenience.
