Deep Learning in Google Lens and Assistant: Vision and Voice Models in Action

Introduction

Artificial Intelligence (AI) is no longer just a futuristic concept; it’s an everyday reality, built into our smartphones, wearables, and digital assistants. At the heart of this shift are tools like Google Lens and Google Assistant, which use deep learning to offer intelligent visual and voice-based interactions. These applications combine AI-powered visual search, speech recognition, and multimodal models to provide real-time, personalized services.

The Role of AI in Google’s Ecosystem

Google’s AI ecosystem is built on robust machine learning infrastructure, including the TensorFlow framework, TPU accelerators, and transformer-based models such as BERT, PaLM, and Gemini. These technologies power services like Google Search, Translate, and, notably, Lens and Assistant.

With AI in Google products, users benefit from real-time information processing, contextual understanding, and highly accurate predictions. Whether you’re pointing your phone at a sign for translation or asking a question out loud, deep learning ensures your experience is intuitive and helpful.

Deep Learning Foundations

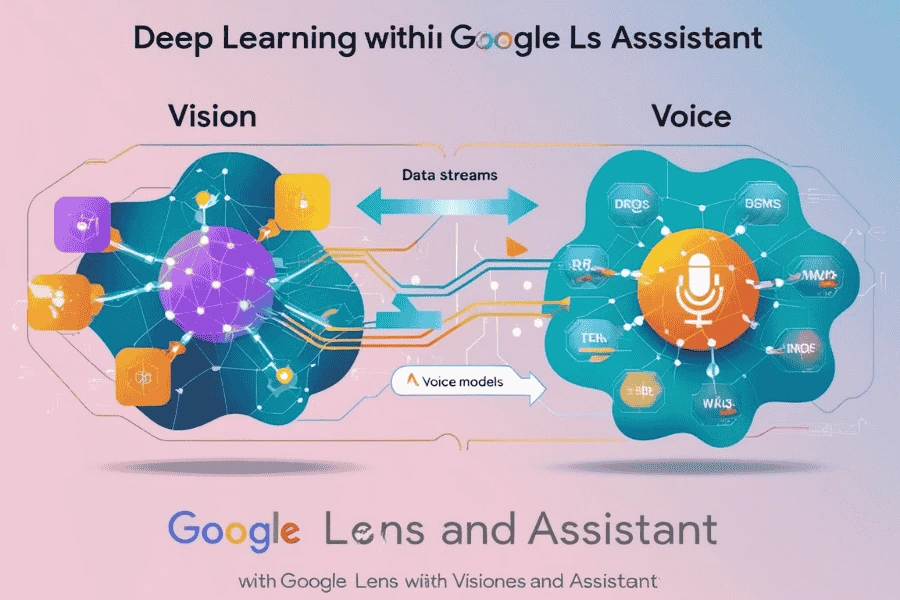

Behind the scenes, both Google Lens and Google Assistant rely on deep neural networks. Vision tasks are powered by Convolutional Neural Networks (CNNs) and Vision Transformers, which enable precise object and text detection. Voice tasks are handled by architectures such as the Recurrent Neural Network Transducer (RNN-T) and Conformer, which are designed for low-latency, real-time inference.
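
To make the vision side concrete, the following is a minimal sketch of the sliding-window convolution at the core of a CNN layer, written in plain Python. The function names, the toy image, and the edge-detecting kernel are illustrative assumptions; production models learn their kernels from data and run on optimized libraries, and this is not code from Google Lens.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of lists), stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            # Weighted sum of the image patch under the kernel.
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Keep positive activations and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x4 image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The middle column of the output lights up exactly where the image changes from dark to bright, which is the sense in which early CNN layers act as feature detectors.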

These models are optimized for edge deployment, running on smartphones and smart devices with minimal latency and without constant cloud communication. This improves speed and also reduces how much user data has to leave the device.
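
The article does not say how these models are shrunk for phones. One widely used technique is post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats. The sketch below assumes a simple symmetric int8 scheme; `quantize_int8` and `dequantize` are hypothetical helper names, not part of any Google API.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q)  # [50, -127, 0, 100]
# Each restored weight is within half a quantization step of the original.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(max_error <= scale / 2 + 1e-12)  # True
```

Each weight now needs one byte instead of four, at the cost of a small rounding error bounded by half the scale.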

Google Lens: Vision Models in Action

Google Lens object detection uses CNNs and transformers to understand images. It can identify animals, plants, products, text, landmarks, and much more. Its AI-powered visual search makes it possible to take a photo and get immediate, context-rich results.

Lens applies computer vision to perform actions like real-time translation, barcode scanning, and product identification. It also uses on-device AI processing, which allows many of its features to work offline and gives users fast, secure access to visual intelligence even without internet connectivity.

Google Assistant: Powering Voice and Language

Google Assistant uses deep learning to interpret speech and generate natural responses. Its wake-word detection and speech recognition models run directly on the device, which keeps response times short and enhances privacy.
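
As a rough illustration of wake-word detection, the sketch below assumes a small on-device model has already produced one confidence score per audio frame. It smooths those scores with a moving average and triggers only when the average crosses a threshold, so a single noisy frame does not wake the device. The function name, window size, threshold, and scores are all hypothetical; the Assistant's actual detector is not described in this article.

```python
from collections import deque


def detect_wake_word(frame_scores, window=3, threshold=0.8):
    """Return the index of the first frame whose moving-average score
    reaches `threshold`, or None if the wake word is never detected."""
    recent = deque(maxlen=window)  # holds the last `window` scores
    for index, score in enumerate(frame_scores):
        recent.append(score)
        if len(recent) == window and sum(recent) / window >= threshold:
            return index
    return None


# Made-up per-frame scores: one isolated spike, then a sustained match.
scores = [0.1, 0.2, 0.9, 0.1, 0.85, 0.9, 0.95, 0.3]
trigger_frame = detect_wake_word(scores)
print(trigger_frame)  # 6
```

The isolated spike at frame 2 is ignored, while the sustained run of high scores ending at frame 6 triggers detection.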

Natural language understanding is driven by transformer models, which help the Assistant understand context and intent. When a user speaks, the Assistant's speech models decode the audio using neural networks optimized for fast, accurate real-time inference. Speech synthesis models like Tacotron and WaveNet generate human-like responses, making conversations smoother and more natural.

On-Device AI: Fast, Private, Personalized

One of the biggest innovations in these services is on-device AI processing. This allows models to run locally, improving performance and ensuring data doesn’t leave the device unnecessarily. Features like wake-word detection, quick transcription, and even Google Lens features like OCR can function without a network connection.

With on-device learning, the Assistant and Lens can adapt to individual users by learning preferred settings, voice patterns, and behavior, all while preserving privacy. Edge computing supports this further by reducing dependence on cloud services.

Privacy in Voice Assistants and AI Applications

Privacy is a growing concern for AI users. Google addresses this with techniques such as federated learning and differential privacy, which make it possible to train models without sending raw personal data to central servers.
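
The idea behind these two techniques can be sketched in a few lines. In the simplified round below, each device sends only a weight update (never its raw data), the server clips each update to limit any one user's influence, averages them, and optionally adds Gaussian noise in the spirit of differential privacy. The function names and parameters are illustrative assumptions, and a real system would calibrate the noise to the clipping norm and track a privacy budget, which this sketch does not do.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale `update` down so its L2 norm is at most `max_norm`."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm <= max_norm:
        return list(update)
    factor = max_norm / norm
    return [v * factor for v in update]


def federated_round(global_weights, client_updates,
                    max_norm=1.0, noise_std=0.0, rng=None):
    """Apply one round of clipped, optionally noised, federated averaging."""
    if rng is None:
        rng = random.Random(0)  # fixed seed keeps the sketch reproducible
    clipped = [clip_update(u, max_norm) for u in client_updates]
    count = len(clipped)
    averaged = [sum(u[i] for u in clipped) / count
                for i in range(len(global_weights))]
    noisy = [a + rng.gauss(0.0, noise_std) for a in averaged]
    return [w + d for w, d in zip(global_weights, noisy)]


global_weights = [0.0, 0.0]
# Two devices report updates; the second is large and gets clipped.
client_updates = [[0.3, 0.4], [3.0, 4.0]]

new_weights = federated_round(global_weights, client_updates,
                              max_norm=1.0, noise_std=0.0)
noisy_weights = federated_round(global_weights, client_updates,
                                max_norm=1.0, noise_std=0.1)
print(new_weights)    # approximately [0.45, 0.6]
print(noisy_weights)  # same average, perturbed by random noise
```

Clipping shrinks the second device's update from norm 5 to norm 1, so the server's average reflects both devices rather than being dominated by one.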

Privacy in voice assistants is maintained through tools like Voice Match, which personalizes responses based on the speaker’s voice, and user settings that control what data is stored or deleted. The goal is to offer smarter AI without compromising security.

Multimodal AI: The Next Step Forward

Multimodal AI applications combine vision, voice, and text input to create seamless user experiences. For example, users can take a photo with Lens and immediately ask the Assistant a question about it, receiving a voice response that references both visual and verbal inputs.

These kinds of experiences are powered by unified models that understand multiple types of data at once. Innovations like Gemini promise even more integrated interactions, making it easier to navigate the world using a single, intelligent assistant.

Conclusion

The integration of deep learning into mobile apps like Google Lens and Assistant is reshaping how we interact with the digital world. Whether it’s AI-powered visual search, voice recognition in Assistant, or object detection in Lens, these tools demonstrate how much vision and voice models can do in everyday products.

Thanks to innovations like on-device AI processing, edge computing, and stronger privacy protections, we now have smart, fast, and secure tools that understand and respond to us better than ever before. As multimodal AI continues to evolve, the future of human-machine interaction looks more seamless, intelligent, and personalized.
